All members of our research group are provided with a new laptop (MacBook Pro), plus a large display, keyboard, and mouse of their choice. They are also given access to the following supercomputers:

- Fugaku (R-CCS)

- Summit (ORNL)

- ABCI (AIST)

- TSUBAME 3.0 (TokyoTech)

- Reedbush / Oakforest-PACS / Oakbridge-CX (UTokyo)

In addition, a private cluster "Hinadori" is maintained within the lab.

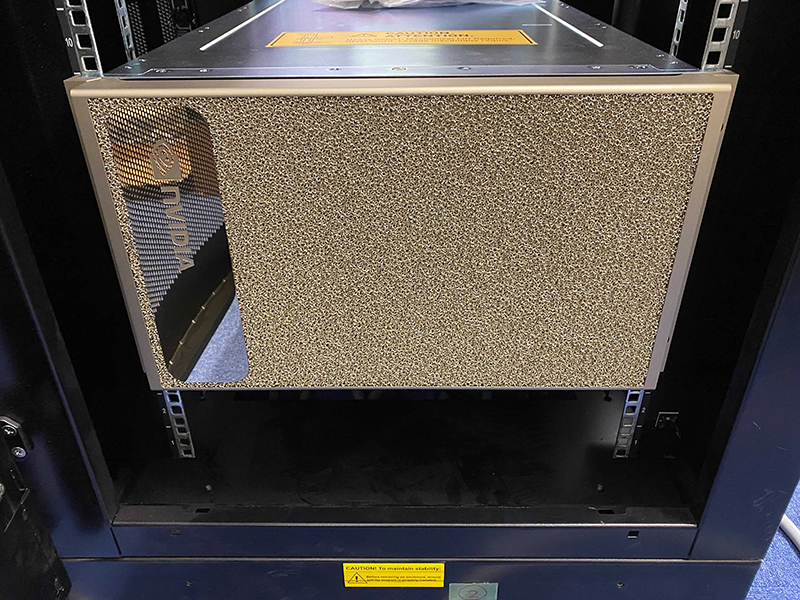

Hinadori Cluster

The Hinadori cluster (Hinadori) is designed and operated to provide the latest environments, including ones not yet deployed on supercomputers, so that cutting-edge research can be conducted within the lab.

Students take the lead in deciding the specifications, handling procurement, and operating the cluster.

Hardware

GPUs are essential to the day-to-day research in HPC and deep learning at Yokota Lab.

Hence, we provide multiple GPU servers (74 GPUs in total across the nodes listed below):

| CPU | Host memory | GPU (number per node) | Number of nodes |
| --- | --- | --- | --- |
| Intel Xeon Silver 4215 | 96GB | NVIDIA GeForce GTX 1080Ti (2) | 4 |
| Intel Xeon Silver 4215 | 96GB | NVIDIA GeForce RTX 2080 (2) | 2 |
| Intel Xeon Silver 4215 | 96GB | NVIDIA GeForce RTX 2080Ti (2) | 1 |
| Intel Xeon Silver 4215 | 96GB | NVIDIA TITAN V (2) | 1 |
| Intel Xeon Silver 4215 | 96GB | NVIDIA Tesla V100 PCIe 16GB (1) | 1 |
| Intel Xeon E5-2630v3 | 64GB | NVIDIA TITAN RTX (1) | 1 |
| AMD EPYC 7742 | 1TB | NVIDIA A100 40GB SXM4 (8) | 1 |
| AMD EPYC 7313 | 512GB | NVIDIA A100 80GB PCIe (8) | 1 |
| AMD EPYC 7453 | 512GB | NVIDIA A6000 (8) | 1 |
| AMD Ryzen Threadripper 3960X | 128GB | NVIDIA A4500 (2) | 4 |
| AMD EPYC 7402 | 512GB | NVIDIA GeForce RTX 3090 (8) | 3 |

(As of 2023.01.14)

Other features of Hinadori include:

- Login node as a bastion for SSH

- 100 TB SSD file server (RAID 10)

- 400 TB HDD file server (RAID 10)

- Private VPN service

These features enable students to conduct experiments remotely.

Hinadori also supports multi-node parallel computing with MPI over 10GbE.
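As a rough illustration of what a multi-node MPI job looks like, the sketch below splits a sum over several ranks and reduces the partial results on rank 0. It assumes the `mpi4py` package is installed, which the text above does not state; the function names `block_range` and `main` and the problem size are made up for the example.

```python
"""Sum the integers 0..n-1 across MPI ranks (sketch; assumes mpi4py is installed)."""
try:
    from mpi4py import MPI  # assumption: mpi4py is available on the nodes
except ImportError:
    MPI = None  # fall back to a single process so the script still runs


def block_range(n, size, rank):
    """Return the half-open [start, stop) range of n items owned by `rank`."""
    base, extra = divmod(n, size)
    # The first `extra` ranks each take one additional item.
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return start, stop


def main(n=1_000_000):
    if MPI is None:
        comm, rank, size = None, 0, 1
    else:
        comm = MPI.COMM_WORLD
        rank, size = comm.Get_rank(), comm.Get_size()

    start, stop = block_range(n, size, rank)
    local = sum(range(start, stop))

    # reduce() returns the combined value on the root rank and None elsewhere.
    total = local if comm is None else comm.reduce(local, op=MPI.SUM, root=0)
    if rank == 0:
        print(f"ranks={size} total={total}")
    return total


if __name__ == "__main__":
    main()
```

With an MPI launcher available, a script like this would typically be started with something like `mpirun -n 4 python sum_mpi.py` (the file name is hypothetical); how ranks are placed across nodes depends on the launcher and scheduler configuration.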

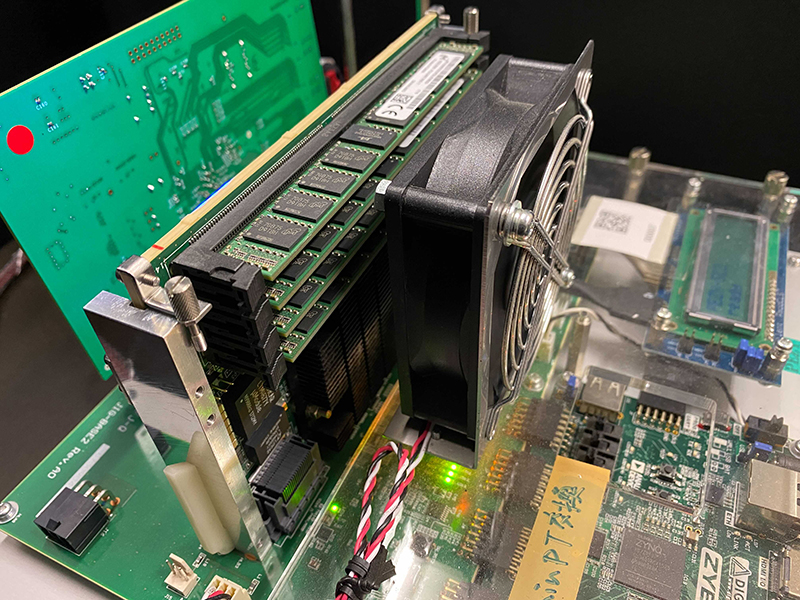

In addition, we conduct research on special-purpose processors, such as Intel KNL nodes and the PEZY-SC2.

Software

Job Scheduling System

Hinadori uses a customized job scheduling system built on the Slurm Workload Manager, which lets users submit jobs with little effort.

It includes a feature that makes it easy to use Slurm's ability to run multiple jobs on a single node.
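Hinadori's customized front end is not described here, so the following is only a sketch of the underlying plain-Slurm idea: a job that requests part of a node's GPUs and CPUs leaves the rest free for other jobs. It assumes the cluster tracks GPUs as generic resources (`--gres`) and allows node sharing; the helper names `render_batch_script` and `submit`, and all default values, are hypothetical.

```python
import subprocess


def render_batch_script(job_name, command, gpus=1, cpus=4, mem_gb=16,
                        time_limit="01:00:00"):
    """Build an sbatch script that asks for only a slice of one node."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --gres=gpu:{gpus}",        # GPUs requested on the node
        f"#SBATCH --cpus-per-task={cpus}",   # CPU cores for the task
        f"#SBATCH --mem={mem_gb}G",          # host memory for the job
        f"#SBATCH --time={time_limit}",      # wall-clock limit
        "",
        command,
    ]
    return "\n".join(lines) + "\n"


def submit(script):
    """Pipe the script to sbatch on stdin and return its stdout message."""
    result = subprocess.run(["sbatch"], input=script, text=True,
                            capture_output=True, check=True)
    return result.stdout.strip()


if __name__ == "__main__":
    # Print the script instead of submitting, so this runs without Slurm.
    print(render_batch_script("train", "python train.py", gpus=2))
```

On an 8-GPU node, four such 2-GPU jobs could in principle run side by side, provided the memory and CPU requests also fit.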

Monitoring System

Hinadori utilizes Prometheus to aggregate metrics, and Grafana to visualize such metrics.

The monitored metrics include the utilization of each CPU and GPU, which is useful for performance optimization, as well as usage history, GPU temperature, and power consumption, which are used for administration.

All users can access this information via a browser.
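Beyond the browser view, metrics stored in Prometheus can also be read programmatically through its HTTP API. The sketch below builds an instant query and parses the JSON response. The server address and the metric name are placeholders, not Hinadori's actual settings: `DCGM_FI_DEV_GPU_TEMP` is the GPU temperature metric exposed by NVIDIA's dcgm-exporter, and whether Hinadori uses that exporter is an assumption.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical values: neither the address nor the metric name comes from the source.
PROMETHEUS_URL = "http://prometheus.example:9090"
GPU_TEMP_QUERY = "DCGM_FI_DEV_GPU_TEMP"  # assumes NVIDIA's dcgm-exporter is scraped


def build_query_url(base_url, promql):
    """Return the instant-query URL for a PromQL expression."""
    params = urllib.parse.urlencode({"query": promql})
    return f"{base_url.rstrip('/')}/api/v1/query?{params}"


def parse_instant_vector(payload):
    """Convert an instant-vector response into (labels, value) pairs."""
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error')}")
    data = payload["data"]
    if data["resultType"] != "vector":
        raise ValueError(f"expected a vector, got {data['resultType']}")
    samples = []
    for series in data["result"]:
        _timestamp, value = series["value"]  # the value arrives as a string
        samples.append((series["metric"], float(value)))
    return samples


def fetch(base_url, promql, timeout=5.0):
    """Run the query against a live server and return the parsed samples."""
    url = build_query_url(base_url, promql)
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_instant_vector(json.load(resp))
```

Calling `fetch(PROMETHEUS_URL, GPU_TEMP_QUERY)` against a reachable server would return one entry per time series, each with its label set and current value.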

Development Environment

Users can specify appropriate versions of CUDA libraries and compilers, which are managed by Environment Modules.

Besides standard applications, Hinadori provides in-house tools, such as one that records GPU temperature, power consumption, and similar metrics while a program is running.
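The in-house recording tool itself is not shown here. As an illustration of the general approach, the sketch below polls `nvidia-smi` while a command runs and collects temperature and power readings. The function names and the sampling interval are hypothetical, and this is not the lab's actual tool.

```python
import subprocess
import time

# nvidia-smi query returning one CSV line per GPU: index, temperature, power draw.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,temperature.gpu,power.draw",
    "--format=csv,noheader,nounits",
]


def _to_float(field):
    """Parse a numeric field; return None for values such as '[N/A]'."""
    try:
        return float(field)
    except ValueError:
        return None


def parse_samples(text):
    """Turn nvidia-smi CSV output into one dict per GPU."""
    samples = []
    for line in text.strip().splitlines():
        index, temp, power = [field.strip() for field in line.split(",")]
        samples.append({
            "gpu": int(index),
            "temp_c": _to_float(temp),
            "power_w": _to_float(power),
        })
    return samples


def sample_gpus():
    """Take one reading from every GPU visible to nvidia-smi."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_samples(out.stdout)


def log_while_running(cmd, interval=1.0):
    """Run `cmd` and sample the GPUs every `interval` seconds until it exits."""
    proc = subprocess.Popen(cmd)
    log = []
    while proc.poll() is None:
        log.append((time.time(), sample_gpus()))
        time.sleep(interval)
    return proc.returncode, log
```

For example, `log_while_running(["python", "train.py"], interval=2.0)` would return the exit code of the command together with a list of timestamped readings.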

Operation

Operations are done with one simple but important rule: "Don't waste time managing."

As the cluster is operated by students on a voluntary basis, it is essential that operations do not cut into research time.

Hence, to minimize maintenance time, we have introduced Ansible for configuration management, IPMI for remote management, and a SaaS LDAP service for user management.

Setting up a new node is also automated, and users can start using it within 30 minutes after OS installation.